Twitter is useful for a lot of things, and one of those things is information. I wanted to see if I could separate the trash from the information. The answer: a really simple classifier trained on only about 300 labeled tweets can actually do a pretty good job. Right now it has no real interface beyond some text output. I stopped working on the interface because it should be built with web technologies, not Processing.

Right now it will output something like the following, based on the last 20 public tweets:

btw, giving is def. hands down better than receiving . time to rest . gonna pray/talk with God . goodnight & Godbless world of twitter :] charLength: 137 atCount: 0 punctuation: 9 linkCount: 0 RT: 0 percentCap: 1

A27 Almere richting Utrecht: Tussen knooppunt Almere-Stad en knooppunt Eemnes 7 km http://twurl.nl/wbrmuu charLength: 105 atCount: 0 punctuation: 7 linkCount: 1 RT: 0 percentCap: 6

Sun K Kwak and her black tape installations http://tinyurl.com/c495jd charLength: 69 atCount: 0 punctuation: 5 linkCount: 1 RT: 0 percentCap: 4

A27 Gorinchem richting Utrecht: Tussen afrit Lexmond en afrit Hagestein 4.7 km http://twurl.nl/qqhimi charLength: 101 atCount: 0 punctuation: 7 linkCount: 1 RT: 0 percentCap: 5

Engadget Update: Sony PSP hacked for use as PC status monitor: No money for a secondary display.. http://tinyurl.com/dkteuk charLength: 124 atCount: 0 punctuation: 10 linkCount: 1 RT: 0 percentCap: 7

TechRepublic - Fast Video Indexer 1.12 (Windows) http://bit.ly/4n8ZYp charLength: 69 atCount: 0 punctuation: 9 linkCount: 1 RT: 0 percentCap: 11

6/20 were informative

It decided 6 of those 20 were informative, based on the following six features:

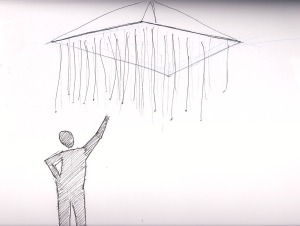

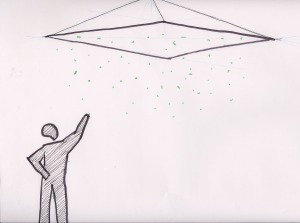

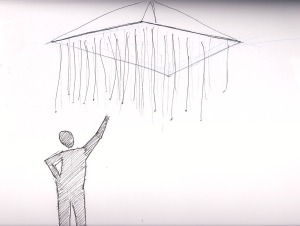

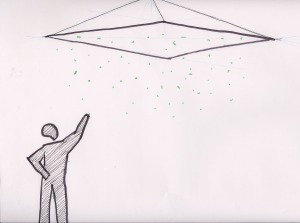

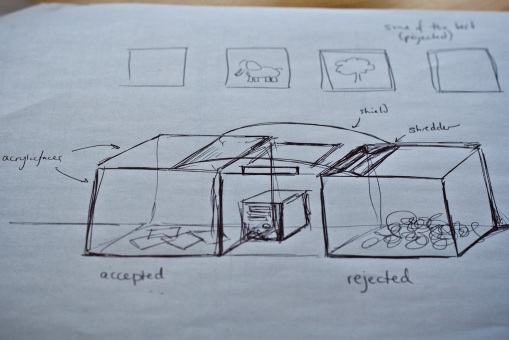
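The six features are cheap to compute. The post doesn't spell out their exact definitions, so this Python sketch infers them from the sample output above, and those inferences are assumptions. `punctuation` counts every character that is not a letter, digit, or whitespace, which means `@` would be counted too. `percentCap` is the integer percentage of uppercase characters. Treating `RT` as a leading "RT " marker is a guess. The sketch only extracts features and is not the classifier itself.

```python
import re


def tweet_features(text):
    """Compute the six tweet features (definitions inferred, not confirmed)."""
    length = len(text)
    caps = sum(1 for c in text if c.isupper())
    return {
        "charLength": length,
        "atCount": text.count("@"),
        # Assumption: anything that isn't a letter, digit, or whitespace.
        "punctuation": sum(1 for c in text if not (c.isalnum() or c.isspace())),
        "linkCount": len(re.findall(r"https?://", text)),
        # Assumption: a retweet is marked by a leading "RT ".
        "RT": 1 if text.startswith("RT ") else 0,
        # Integer percentage of uppercase characters (truncated, not rounded).
        "percentCap": caps * 100 // length if length else 0,
    }
```

With these definitions, the "Sun K Kwak" and "TechRepublic" tweets reproduce the printed values exactly (69/5/4 and 69/9/11 for length, punctuation, and percent caps).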

The idea is similar to Patrick’s box (“what they left behind” from Assignment 1), but instead of objects, people will submit drawings. Each drawing is fed into a rolling scanner that sends the image to the computer, which then decides whether to accept or reject it. There are two large transparent boxes, one for accepted drawings and one for rejected ones. When a drawing is rejected, however, it will be sent through a shredder(!) before being dumped in the reject bin… On the wall behind this contraption, we’ll project the five best drawings so far, just as a curator would display them in a museum.

We hope people will try to “learn” what the curator likes and dislikes, and try to get their art accepted.

There are a couple of machine learning components. We want the curator to have a set of criteria for judging the drawings. First, it will have a pre-trained classifier that defines the curator’s “taste”. But as people submit drawings, it will also redefine its taste, for two reasons: 1. it gets sick of “things” it has seen too often (e.g., stick figures) and starts to hate them; 2. it starts to like elements from the more recent drawings (following the “trend”) if there seem to be too few acceptances. The latter would also help with the fact that
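The two ways the curator's taste drifts can be sketched as a simple weighted scorer. This is a hypothetical Python illustration, not the project's design. It assumes each drawing has already been reduced to a set of feature tags (e.g. `"stick_figure"`) by some upstream recognizer, and the class name `Curator` and all constants are made up. A tag seen more than `boredom_limit` times loses weight each time it appears again (boredom). When the acceptance rate over the last `window` drawings falls below `min_accept_rate`, tags from those recent drawings gain weight (trend-following).

```python
from collections import Counter, deque


class Curator:
    """Hypothetical curator: a weighted tag scorer whose weights drift over time."""

    def __init__(self, taste, boredom_limit=3, fatigue=0.5,
                 window=5, min_accept_rate=0.4, trend_boost=0.3):
        self.weights = dict(taste)           # pre-trained "taste": tag -> weight
        self.seen = Counter()                # how often each tag has appeared
        self.recent = deque(maxlen=window)   # (tags, accepted) for recent drawings
        self.boredom_limit = boredom_limit
        self.fatigue = fatigue
        self.min_accept_rate = min_accept_rate
        self.trend_boost = trend_boost

    def score(self, tags):
        return sum(self.weights.get(t, 0.0) for t in tags)

    def judge(self, tags):
        """Accept if the drawing's total score is positive, then update taste."""
        accepted = self.score(tags) > 0
        self._update(tags, accepted)
        return accepted

    def _update(self, tags, accepted):
        # 1. Boredom: tags seen too often lose weight on every further appearance.
        self.seen.update(tags)
        for t in tags:
            if self.seen[t] > self.boredom_limit:
                self.weights[t] = self.weights.get(t, 0.0) - self.fatigue
        # 2. Trend-following: once the window is full, if too few drawings were
        #    accepted, boost every tag that appeared in those recent drawings.
        self.recent.append((frozenset(tags), accepted))
        rate = sum(a for _, a in self.recent) / len(self.recent)
        if len(self.recent) == self.recent.maxlen and rate < self.min_accept_rate:
            for recent_tags, _ in self.recent:
                for t in recent_tags:
                    self.weights[t] = self.weights.get(t, 0.0) + self.trend_boost
```

For example, a curator that starts out liking stick figures accepts the first few and then, once bored, starts rejecting them.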

We have not decided exactly what the pre-trained classifier will be trained on. A couple of possibilities are: 1. a puzzle, e.g. has to have; 2. whatever we like.

Another idea is to provide colored paper and/or paper with some pre-drawn figures to encourage certain kinds of drawings. The colored paper would also make the installation more visually appealing.

Things to build: two boxes (with doors so the bins can be emptied when needed), and a mechanical “switch” (probably a Teflon cookie sheet moved by a servo) connected to the computer (probably through an Arduino).

Things to program/learn: the predefined classifier, and how to change strategies over time (with no particular goal, or just to balance the bins).
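Balancing the bins could be as simple as moving the acceptance threshold after every decision. Here is a hypothetical Python sketch. It assumes the curator produces a numeric score per drawing, and `BinBalancer`, `target`, and `step` are illustrative names and values.

```python
class BinBalancer:
    """Hypothetical sketch: nudge the acceptance threshold so that roughly
    `target` of all drawings end up in the "accepted" bin."""

    def __init__(self, threshold=0.0, target=0.5, step=0.1):
        self.threshold = threshold
        self.target = target
        self.step = step
        self.accepted = 0
        self.total = 0

    def decide(self, score):
        accepted = score > self.threshold
        self.total += 1
        self.accepted += accepted
        rate = self.accepted / self.total
        # Too many acceptances -> get pickier; too few -> get more lenient.
        if rate > self.target:
            self.threshold += self.step
        elif rate < self.target:
            self.threshold -= self.step
        return accepted
```

Feeding it the same mediocre drawing repeatedly makes it alternate between accepting and rejecting, which keeps the two bins roughly even.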

Other work: getting a scanner to trigger the computer when a drawing is fed in… Another option is to use a camera, but the same problem remains.

Victor Vilchis and Laura Paoletti

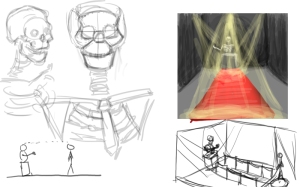

Our goal is to explore the concept of prejudice.

Toying with the idea of opinions formed unjustly, we came up with the idea of a humanoid figure sitting on a pedestal of sorts, which can be approached by only one person at a time, and only by walking along a specific carpeted path. However, to approach this figure, one must first get permission. The carpet is preceded by a sign that reads either “Come forward” or “Don’t come forward”, depending on the piece’s prejudices based on height.

The spectator may wonder what’s going on. If instructed to come forward, he or she may walk up to the figure, shake its hand, and receive a reward. If instructed not to come forward, he or she may walk away, or approach the figure anyway and shake its hand, which will slowly shift the machine’s judgment of similar people.
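One way to model this drifting judgment is sketched below in Python. Every detail is an assumption rather than the artists' implementation: heights are measured in centimeters and grouped into 10 cm bins, the initial bias function and learning rate are hypothetical, and “similar people” is interpreted as the neighboring height bins, which receive half the adjustment.

```python
class HeightPrejudice:
    """Hypothetical sketch: per-height-bin "trust" that decides the sign and
    softens when turned-away visitors shake the figure's hand anyway."""

    def __init__(self, initial_bias, bin_width=10, rate=0.2):
        self.initial_bias = initial_bias  # function: height in cm -> starting trust
        self.bin_width = bin_width
        self.rate = rate
        self.trust = {}                   # bin index -> current trust

    def _bin(self, height_cm):
        return int(height_cm // self.bin_width)

    def _get(self, b):
        # Lazily initialize a bin from the bias evaluated at the bin's center.
        if b not in self.trust:
            self.trust[b] = self.initial_bias((b + 0.5) * self.bin_width)
        return self.trust[b]

    def sign(self, height_cm):
        if self._get(self._bin(height_cm)) >= 0:
            return "Come forward"
        return "Don't come forward"

    def handshake(self, height_cm):
        """A visitor shook the hand. If their bin was distrusted, raise its trust,
        and raise the neighboring bins ("similar people") by half as much."""
        b = self._bin(height_cm)
        if self._get(b) < 0:
            self.trust[b] += self.rate
            for nb in (b - 1, b + 1):
                self.trust[nb] = self._get(nb) + self.rate / 2
```

With a bias such as `lambda h: (h - 170) / 100` (prejudiced against short visitors), a 155 cm visitor is first told not to come forward, but after one defiant handshake the sign changes for that height.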

After several attempts at this exercise, I decided I did not understand how Weka’s classification works, and I gave up. I had trouble switching the data from the digits example to my ad data, and I couldn’t get it to accept any image larger than 10×10. How can I apply the histogram similarity sorting to the training data?
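One possible answer to the histogram question is sketched in Python under a few assumptions. Each image is a grayscale grid given as a list of rows of 0-255 values. Similarity is measured with histogram intersection, a common choice swapped in here because the post doesn't name one. A histogram has a fixed number of bins no matter how large the image is, so it could also serve as a fixed-length feature vector for images bigger than 10×10.

```python
def gray_histogram(pixels, bins=8):
    """Normalized histogram of grayscale values (0-255) for an image given as
    a list of rows. The result always has `bins` entries, whatever the image size."""
    counts = [0] * bins
    total = 0
    for row in pixels:
        for value in row:
            counts[min(value * bins // 256, bins - 1)] += 1
            total += 1
    return [c / total for c in counts]


def intersection(h1, h2):
    """Histogram intersection: 1.0 for identical distributions, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def sort_by_similarity(query_pixels, training, bins=8):
    """Return (label, similarity) pairs for `training`, a list of (label, pixels),
    ordered from most to least similar to the query image."""
    q = gray_histogram(query_pixels, bins)
    scored = [(label, intersection(q, gray_histogram(px, bins))) for label, px in training]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

In Processing, the grayscale values would first have to be pulled out of the image's pixels; the sorted list then gives the most similar training images first.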

This is the mock-up version of the idea:

Decades

This is the closest I got to a working version… the rest just didn’t do anything:

Decades 2

Are We There Yet?; The Pursuit of the American Dream


This piece serves as a kind of open-ended barometer of the state of mind of the U.S., “measuring” how close we are today to our understanding of the American Dream.

The piece makes this assessment based on the day’s headlines in the New York Times. The applet was trained on NYTimes headlines from January 2009, and I labeled each headline by hand as evidence that we’re living the American Dream (“good”) or as evidence that the American Dream is not yet a reality (“bad”). TF-IDF was used to find the most important words in each headline and to compare the training headlines with the day’s headlines.

Every day the headlines are downloaded and classified by taking the labels of their nearest neighbors (the most similar training headlines). The ratio of “good” to “bad” headlines is displayed as a line across the digital canvas (up = American Dream). Currently the applet is hard-coded to use the headlines from the presentation day (March 17th). Code for getting the current date was found, but there wasn’t time to convert the date into the format the headline request needs.
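The pipeline described above (TF-IDF vectors, nearest-neighbor labels, and the share of “good” headlines) can be sketched in Python. This is not the applet’s code. The tokenizer, the plain `log(N/df)` IDF variant, cosine similarity, the default `k`, and the YYYYMMDD date format in `nyt_date` are all assumptions for illustration. `good_fraction` expresses the good/bad ratio as the fraction of headlines labeled “good”.

```python
import math
import re
from collections import Counter
from datetime import date


def tokenize(text):
    """Lowercase words; punctuation is dropped."""
    return re.findall(r"[a-z']+", text.lower())


def vectorize(tokens, idf):
    """TF-IDF vector as a dict; words never seen in training get no weight."""
    tf = Counter(tokens)
    return {t: count * idf[t] for t, count in tf.items() if t in idf}


def train(headlines):
    """Build the IDF table and a TF-IDF vector for each training headline."""
    docs = [tokenize(h) for h in headlines]
    df = Counter(t for doc in docs for t in set(doc))
    idf = {t: math.log(len(docs) / n) for t, n in df.items()}
    return [vectorize(doc, idf) for doc in docs], idf


def cosine(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def knn_label(headline, vectors, labels, idf, k=3):
    """Majority label among the k most similar training headlines."""
    q = vectorize(tokenize(headline), idf)
    ranked = sorted(range(len(vectors)), key=lambda i: cosine(q, vectors[i]), reverse=True)
    return Counter(labels[i] for i in ranked[:k]).most_common(1)[0][0]


def good_fraction(headlines, vectors, labels, idf, k=3):
    """Share of the day's headlines labeled "good" (higher = closer to the Dream)."""
    predicted = [knn_label(h, vectors, labels, idf, k) for h in headlines]
    return predicted.count("good") / len(predicted)


def nyt_date(d=None):
    """Format a date (default: today) as YYYYMMDD, the assumed request format."""
    d = d or date.today()
    return d.strftime("%Y%m%d")
```

Using `nyt_date()` with no argument would replace the hard-coded March 17th with the current day; `nyt_date(date(2009, 3, 17))` gives `"20090317"`.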

The open-ended appearance is intended to encourage viewers to interpret the messages “not there yet” or “almost there” according to their own beliefs and ideals.

Interesting ways to grow:

– Create a web widget for NYTimes where people could rate headlines, perhaps on a scale. This would multiply the training data and “sharpen” the classifier. The classifier would then also reflect a collective notion of the American Dream instead of only mine.

– Visualize significant words that are associated or in conflict with the American Dream

The classifier works in the sense that it generates labels, but the labels are not very meaningful. Several problems are occurring:

– The NYTimes data has many bugs, so a lot of data is getting lost

– The training data is very biased, because it covers such a short time span (1 month) and about 90% of it is labeled “bad”

– Headlines were chosen as the material to observe because each word in a headline is very carefully chosen, but a headline may not contain enough text to accurately predict the content of the article. An alternative is to use the body of the article instead.

Download zip here: http://joanaricou.com/nytimes_tutorial4.zip

http://paulshen.name/sketches/4

Paul Shen

http://paulshen.name/sketches/6

Paul Shen

http://paulshen.name/sketches/2

Paul Shen