The Curator

Polo Chau

François Chu

Sue Ann Hong

Patrick Gage Kelley

Art That Learns 2009.

Introduction

This installation explores acceptance and rejection of the artist, the influence of institutions such as museums and galleries on the directions artists take, and the influence of artists on those institutions.

Here, a computer automatically decides whether to add simple drawings submitted by the audience to its collection. While our society judges and receives art by many criteria, "The Curator" simulates just one simple aspect: originality.

It uses a machine learning algorithm based on anomaly detection to decide which pieces to accept, rejecting those similar to ones it has already seen. (To simulate the concept of revival, the algorithm also forgets older pieces after a while.)

Hence the computer adapts to the artistic ideas the audience gives it, and we hope that its decisions in turn shape the submissions, as the audience tries to please the machine and avoid brutal rejection.

The Process

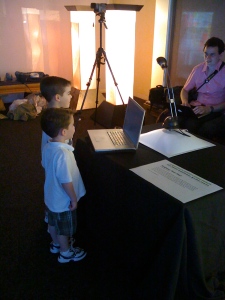

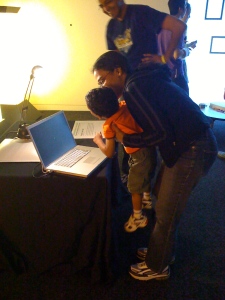

Our design was iterative from the beginning. The idea, a cousin of an early concept called "Lack" that was presented to the class, involved children by letting them create their own artworks to be appraised.

Early on we decided on two end states: acceptance, and rejection, in which the work would be destroyed. The physical incarnations changed, however, from clear acrylic boxes to the idea of the shreds simply tumbling to the floor.

The best example of our attempts to build simplicity into our design comes from the submission module. We knew the children needed a way to enter their work into the machine, but the specifics of this mechanism changed frequently: from a document-feeding strip scanner, to a vertical slot, to a horizontal slot, to a deconstructed flatbed scanner. In the end we went with a single slot in a piece of acrylic.

Our physical construction involved laser cutting, woodworking, bending acrylic, priming, painting, deconstructing a mouse, deconstructing a shredder, and finally electrical work.

The final circuit was simple: an Arduino drove two servos, one for acceptance and the other for rejection, plus a relay (switched through a transistor and powered by a 9V battery) that controlled the timing of the shredder, whose automatic mechanism and safety switch had been removed.

The shredder itself was deconstructed and rebuilt inside of a clear acrylic box.

For the learning algorithm we use a simple anomaly detection method. We keep the last n drawings (we use n = 30) in the form of an orthonormal basis. Every time we get a drawing, we compare its pixel feature vector v with the existing basis vectors (except the oldest one, which the new vector replaces) and store the component of v orthogonal to them. Before creating the new basis vector, we test the drawing for acceptance by projecting it onto the span of the orthonormal basis and computing the reconstruction error. If the reconstruction error is greater than our acceptance threshold, the drawing is accepted. Intuitively, if the current drawing can be described well by the last 30 drawings (and hence is not "original"), we do not accept it. Note that since the feature space (about 300×200 = 60,000 pixels) is much larger than the 30-dimensional basis, the basis cannot span the space of drawings.
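A minimal sketch of the acceptance test described above, in plain Python so it runs without dependencies. The class name `Curator`, the helper names, the `eps` cutoff for near-zero residuals, and the choice to test against the full current basis before dropping the oldest vector are our assumptions, not the installation's original code. Drawings are assumed to arrive as flattened lists of pixel values.

```python
import math
from collections import deque


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def orthogonal_residual(v, basis):
    """Component of v orthogonal to every vector in an orthonormal basis.

    Modified Gram-Schmidt: subtract the projection onto each basis vector in turn.
    """
    residual = list(v)
    for b in basis:
        c = dot(residual, b)
        residual = [r - c * bi for r, bi in zip(residual, b)]
    return residual


class Curator:
    """Hypothetical sketch of the originality test (not the original code)."""

    def __init__(self, n=30, threshold=0.0, eps=1e-9):
        self.n = n                  # number of recent drawings remembered
        self.threshold = threshold  # accept if reconstruction error exceeds this
        self.eps = eps              # residuals shorter than this add no new direction
        self.basis = deque()        # orthonormal vectors, oldest first

    def reconstruction_error(self, v):
        """Distance from v to its projection onto the span of the basis."""
        r = orthogonal_residual(v, self.basis)
        return math.sqrt(dot(r, r))

    def submit(self, v):
        """Decide whether to accept v, then fold it into the basis."""
        error = self.reconstruction_error(v)
        accepted = error > self.threshold
        # Forget the oldest drawing once n are stored (the "revival" effect).
        if len(self.basis) >= self.n:
            self.basis.popleft()
        # Store the normalized component of v orthogonal to the remaining vectors.
        r = orthogonal_residual(v, self.basis)
        norm = math.sqrt(dot(r, r))
        if norm > self.eps:
            self.basis.append([x / norm for x in r])
        return accepted, error
```

With `Curator(n=2, threshold=0.5)`, submitting `[1, 0, 0]` is accepted, a scaled copy `[2, 0, 0]` is rejected with zero error, and once two newer drawings have pushed the first one out of memory, `[1, 0, 0]` is accepted again. For real 60,000-pixel drawings, NumPy arrays would be far faster than Python lists, with the same logic.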

We also learn the acceptance threshold over time to maintain a specified acceptance rate (we used 40%). After deciding the fate of each drawing, we set the threshold so that it would have accepted 40% of the last 30 drawings.
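The threshold update can be sketched the same way. `update_threshold` is a hypothetical helper; it assumes the reconstruction errors of the last 30 drawings are kept in a rolling window, that a drawing is accepted when its error is strictly greater than the threshold, and that ties at the boundary are rare enough to ignore.

```python
from collections import deque


def update_threshold(recent_errors, rate=0.4):
    """Return a threshold that would have accepted `rate` of recent_errors."""
    errors = sorted(recent_errors, reverse=True)
    if not errors:
        raise ValueError("need at least one past error")
    k = round(rate * len(errors))  # how many recent drawings should have passed
    if k >= len(errors):
        return float("-inf")       # accept everything
    # Exactly the k errors ranked above errors[k] exceed it (barring ties).
    return errors[k]


# Usage: keep a rolling window of the last 30 reconstruction errors.
window = deque(maxlen=30)
for error in [0.2, 0.9, 0.5, 0.7, 0.1]:
    window.append(error)
threshold = update_threshold(window)  # 0.5: only 0.9 and 0.7 (2 of 5) exceed it
```

Because `deque(maxlen=30)` silently drops the oldest entry, the threshold tracks only recent submissions, which mirrors the way the basis forgets old drawings.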

The Installation

The installation was reasonably straightforward. Late in the process we found out about the light-up wall that would serve as the backdrop for our work, so we added frames to fill the space. These increased the overall impact of the artwork and added a second stage of "acceptance" (albeit a human stage, not one controlled by learning).

We made a few changes during the exhibition, including changing the timing of the button (adding a delay), adjusting the acceptance threshold, and fixing one of the servos, which was drawing too much power from the Arduino.

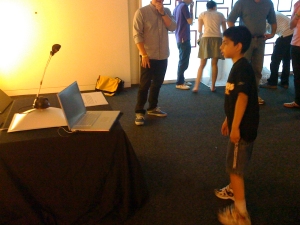

Observations

One success is that we were actually able to capture children's attention and hold it, possibly for too long: many parents had to pull their children away from the exhibit. Children were pleased by both acceptance and rejection. The clear box was a sign of winning, but the shredder was fun, loud, and visceral. Since every outcome felt like a reward, they wanted to keep drawing, sometimes to the point of mass-producing scribbles… er, artwork.

Conclusion

We are going to call this a success: children liked it, no one cried, no one got hurt, it was able to stay in the museum, the learning worked (well enough), and it photographed well. The biggest failure is that it likely did not truly accomplish its intended reflection on rejection in society, but with six-year-olds this may be a nearly impossible task.
